Initial Bulk Data Import

For data that has already been collected in Libreoffice Calc / Microsoft Excel, a bulk data import macro is available. The following procedure can be used with an empty database or with one that already contains records.The import procedure makes sure that existing records in the database are not overwritten.

Backup, Backup, Backup!

Before proceeding, backup the database and ensure that the restore process is working. Manually removing bulk imported data is often not fun! For details see the instructions provided on the backup page

Text Formatting

The following formatting of text in individual cells is preserved during the data transfer:

-

Bold and italics text

-

Character color (but not background color!).

Thoughts on Non-ASCII Characters

Non-ASCII characters are used in many languages while most string functions in PHP used in the backend only support single byte ASCII characters. To compensate, strings in DRDB are always HTML encoded and special characters (e.g. German 'Umlaute' such as 'ä,ü,ß', French accented characters such as 'é,â', etc.) are stored as HTML escape sequences. Example: ö = ampersand + ouml;.

Note: This means that the number of characters in a string variable is higher than the actual number of characters if special characters are used. Also, formatting such as bold, italics, etc. is embedded in strings as HTML tags, which further increase the string length.

Empty Lines

-

Lines in the Calc/Excel document can be empty. This will be detected and the line will be ignored during the bulk import process. In other words, no empty records are created.

-

Note: The Json formatted input file DOES contain the empty lines, records with all fields set to

<p></p>. This is o.k., the bulk import process will filter them out on the server.

Checks Performed Before Data Is Imported

For the bulk data transfer, the following requirements must be fulfilled by the data in the Calc/Excel document:

-

The number of the columns in the document and the number of fields in the database must be identical.

-

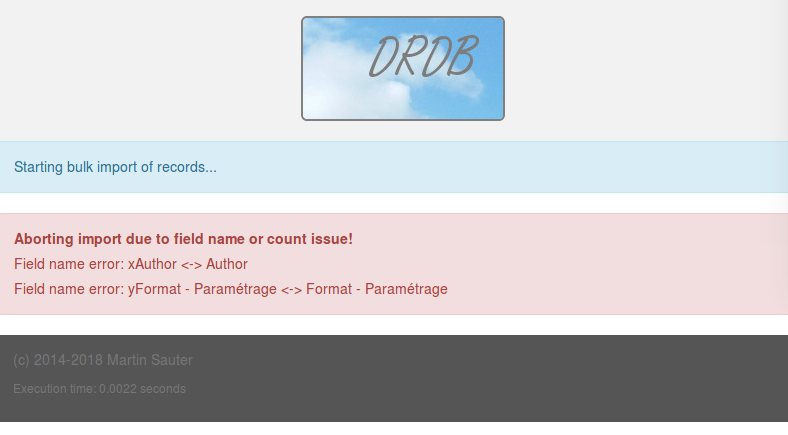

The first (horizontal) row of the Calc/Excel sheet must contain the column names and they must exactly match the field names in the database.

-

The order of the Calc/Excel column names must be identical to the order of the database fields as configured in /config/database-structure.php.

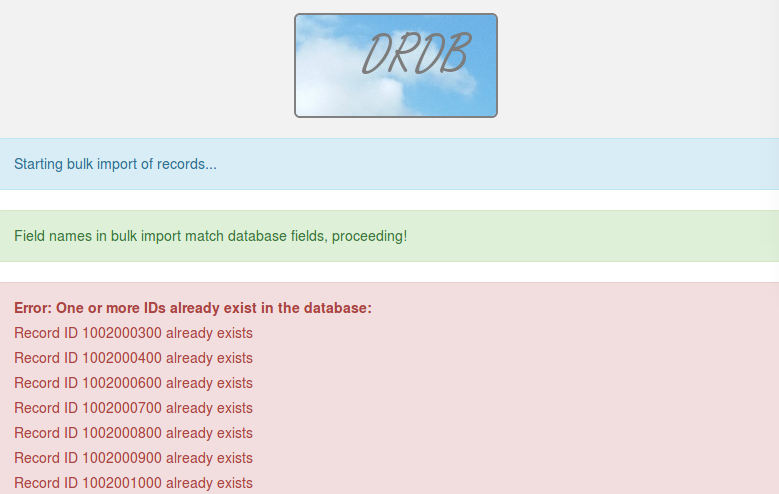

All checks are performed before bulk import starts. If any of these conditions is not met, the import process is aborted and no data at all is written to the database. It's an all or nothing approach. Example: If even one of the record IDs given in the import data already exists, the import process is aborted and NO records will be imported.

Bulk export from Calc / Excel with a Libreoffice Macro

-

A Libreoffice macro is provided as part of this project in Example Database.odt (Libreoffice Calc document) which converts tabulated data into JSON format (Javascript Object Notation, see Wikipedia) that can be copied/pasted into the bulk import page of the DRDB database.

-

If your data is not in Libreoffice calc but in Excel format, open the Excel file in Libreoffice Calc and save it in Libreoffice format. This is required to save Libreoffice macros in the document! Then open 'Example Database.ods' and copy/paste the macro code to your own calc file.

-

To get to the bulk macro, go to 'Tools -> Macros -> Organize Macros -> Libreoffice Basic'. In the dialog box that opens up, click on the Calc document name, then double click on 'standard -> ExportJSON' and then click once on 'main'. Then click on the 'edit' button. This will open the Libreoffice Basic edit window.

-

Before the macro is run the following variables need to be adapted:

'****************************************************************

'

' ADAPT the following contants before starting the JSON export

' procedure.

'

'****************************************************************

Const START_ID = 1002000100

'Which sheet to export, the numbering starts at 1

Const SHEET = 1

'Last column to be exported. Numbering starts at 1 (as shown in Calc)

Const LAST_COLUMN = 9

'First and last row to be exported. Row numbers as shown in Calc!

'(i.e. first line would be 1 and NOT 0!)

Const FIRST_ROW = 1

Const LAST_ROW = 32

Const OUTPUT_FILENAME = "~/Desktop/json-export-data.txt"

-

Execute the macro by putting the cursor into the 'main' function and then by clicking on the execute icon.

-

After the macro has run, inspect the created JSON file. The first array at the top must contain the field names which have been taken from the first line of the Calc/Excel file.

Here's how the beginning of the JSON file for the demo database looks like:

{

"0": {

"0": "1002000100",

"1": "<p><b>Doc ID</b></p>",

"2": "<p><b>Title</b></p>",

"3": "<p><b>Title Notes</b></p>",

"4": "<p><b>Author</b></p>",

"5": "<p><b>Cross Reference</b></p>",

"6": "<p><b>Format - Paramétrage</b></p>",

"7": "<p><b>Notes on Content</b></p>",

"8": "<p><b>Relates to</b></p>",

"9": "<p><b>Number of Systems</b></p>"

},

"1": {

"0": "1002000200",

"1": "<p><b></b></p>",

[...]

Note: The HTML formatting (<p>, <b>, etc.) is present because the first row of the Calc sheet is formatted in bold. The HTML tags will automatically be stripped of during the bulk import process on the server side.

Importing the Bulk Data to the DRDB Database

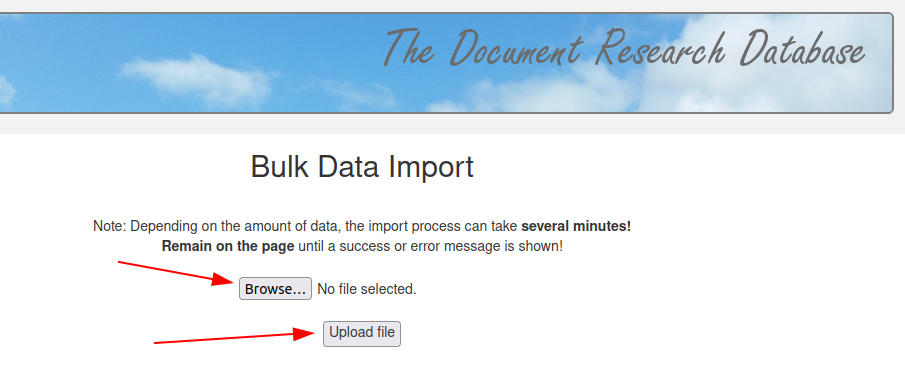

Now open the import page of the database by clicking on the "Administration" icon in the main page of the database and then click on the 'Bulk Import' icon. Select the JSON file with the "Browse" button and then press the 'Upload' button to start the import.

Note: Depending on the amount of data, importing data could take quite some time. 400 records with a total JSON file size of 1 MB could take around 5 minutes to import. To see how the system processes the data, open a shell window on the server and do a 'tail -f' on the log file.

Success or Failure Indication

Before data is imported, DRDB validates that the number of columns matches the number of fields of the database, that the field names match and that the record numbers in the JSON data do not yet exist in the database. If any of these conditions are not fulfilled, no data is imported and an error message is shown.

The following screenshot shows how DRDB reports that some records in the JSON data already exist in the database:

The following screenshot shows how DRDB reports that two field names in the database do not match the names of the rows in the JSON file.

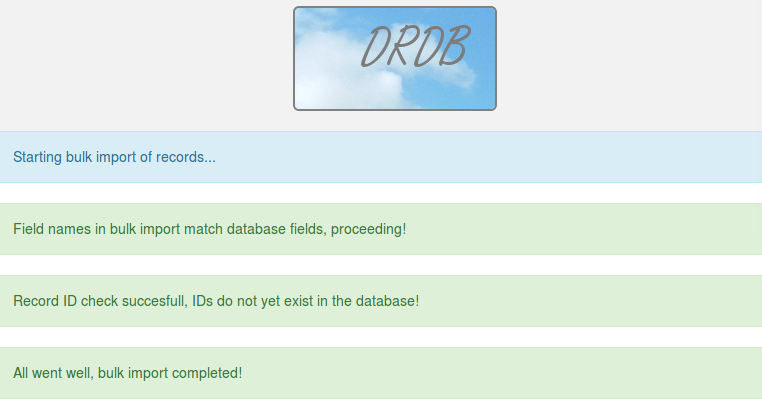

The following screenshot shows how DRDB reports successful data import.

Large Bulk Data Import

While the web interface allows importing bulk data, the maximum amount of data that can be processed is currently limited depending on the web server settings. A Docker installation with an nginx reverse proxy front end is limited to around 2 MB of json formatted data. Also, the http connection may time out before the import has finished. When a timeout error is shown, check on the server if the import is still running in the background. This is definitely an area that needs to be improved in a future software enhancement.

In the meantime, the following 'hack' could help to import a large amount of data:

-

Copy the json formated bulk import text file into the root directory of the drdb Docker container (or to a directory on a web server for non-Docker installations)

-

Modify

/var/www/html/drdb/content/bs_bulk_import_process.phpas follows:

// Get the bulk data from the POST variable and convert from JSON

// format to an associative array

//$bulk_data_JSON = $_POST['bulk'];

$bulk_data_JSON = file_get_contents('/bulk-data-to-import.txt');

-

Note: Comment out the

$_POSTline and add thefile_get_contentsline to read from the file instead of from the web page input. -

Once done, go back to the bulk import page, leave the bulk import text box empty and just press the 'Submit' button.

-

The import process will then use the bulk-data-to-import.txt file.

-

Note: The web front-end might show a timeout failure if the process takes too long. In practice, super large bulk mports can take an hour or more and still finish successfully, despite the timeout error shown on the web frontend. There is no timeout on the back-end however, and all data will eventually be imported. The only way to see when the process is finished is to watch the CPU utilization on the server.